Talking About AI Progress (March 2023)

I have been wanting to write this one for many weeks, but then I decided I would write a single long post about the stuff that happened in March 2023 related to AI.

March 2023 was truly insane, seriously crazy. I have never seen progress like this in my entire life. Like, all records were broken. But it's funny because it's still not the singularity. And it's just the third month of 2023. I thought February was mind-blowing, but March made it look like nothing. It's April 2nd right now and I'm not sure if this month will beat what happened in March. So let's start from the beginning. I will review a lot of stuff.

The month started with OpenAI releasing the ChatGPT and Whisper APIs. This itself was crazy because now people can use these APIs on their sites and apps and deliver a ChatGPT-like experience. Pretty sure by now thousands of apps have already incorporated it. Although I really hate the cold responses from ChatGPT and its restrictions, it was a great step nonetheless. OpenAI still hasn't released the customization option for ChatGPT. But I'm sure this API release will bring significant disruption to a lot of fields dealing with customer support.

This month was crazy for the field of robotics. I truly consider it the most important field. I mean, AI is great and all, but it will truly become impressive when I start seeing robots in the real world that people interact with the way they interact with their smartphones. Imagine having a robot pet or an android like 2B from Nier Automata. Waifu. Lmao. We are still very far away from something like that though.

Figure Robotics is a new startup with a mission to commercialize humanoid robotics. They showed nothing yet, it's basically their beginning. But I'm fairly sure the king of humanoid robotics will be Tesla, or maybe Microsoft or Google. Microsoft because of their investment in OpenAI. There were multiple other robotics updates this month besides this one.

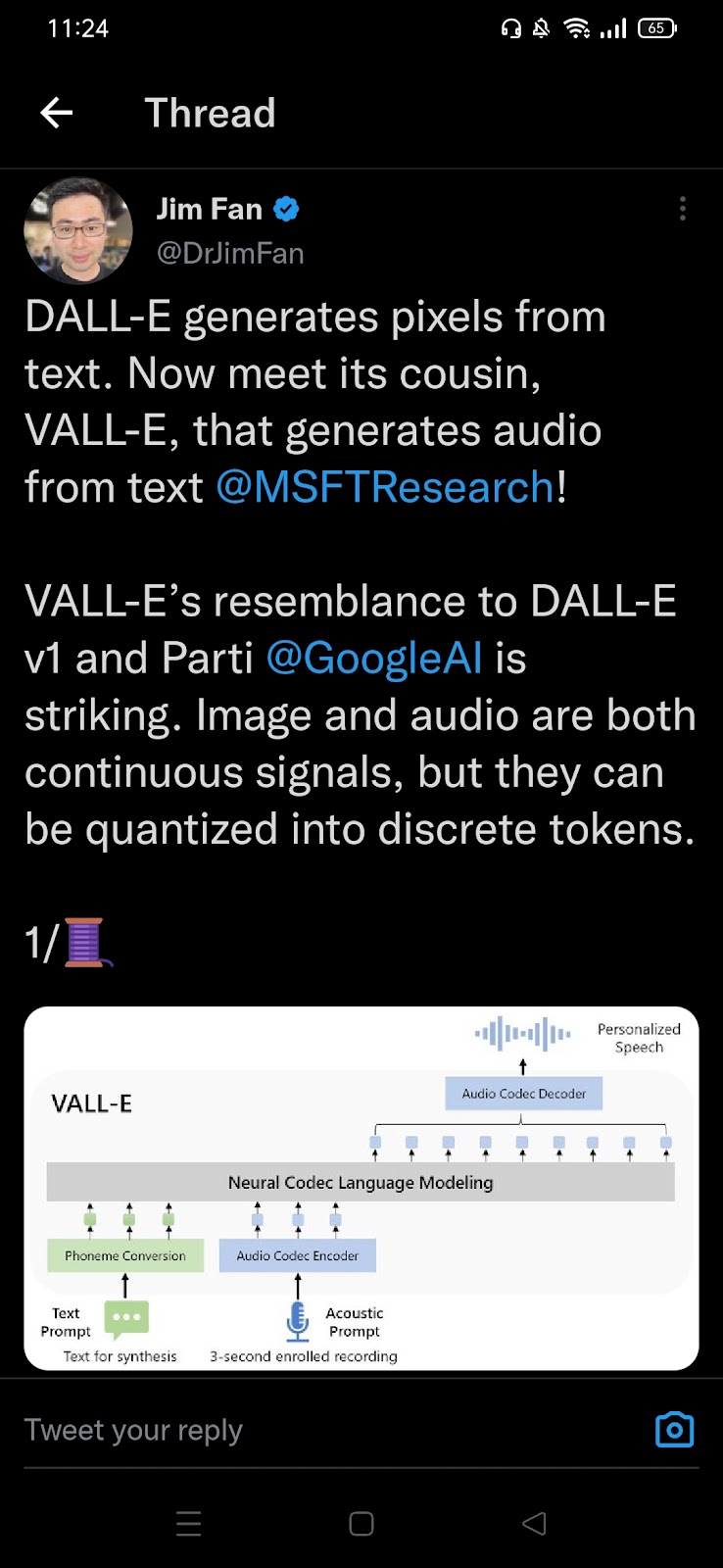

The Stable Diffusion moment for Large Language Models started this month when Meta's LLaMA LLMs got leaked on 4chan. Within just a few days people started tinkering with it and optimising it. People started running it on their Macs, smartphones, even a Raspberry Pi. A lot of fine-tuning also happened.

We saw that OpenAI is alpha testing their upcoming text-to-image model, DALL-E 2 Experimental. I thought it was DALL-E 3 at first. But they won't be able to beat Midjourney or Stable Diffusion tbh.

This month was amazing for multimodal AIs. First Google showed PaLM-E, which was truly mind-blowing. Then Microsoft showed Prismer.

Wait wait... I think writing it like this is boring. Let me change my style here. I am just gonna talk randomly now.

Remember last month and before, when I said that OpenAI would soon release GPT-4? Well, it happened. GPT-4 was launched on March 14. A week before that, a Microsoft employee revealed it was coming, so people knew it would launch. And it is a multimodal LLM. Text plus vision. It scores around the top 10% on a lot of exams and does better than so many humans. It's mind-blowing. And of course there's its vision capability, which tbh we still haven't seen properly. In the demo it was shown that we can give it a rough drawing and it can turn it into a working website, and it can explain memes etc. And the 32k context window is amazing. But we haven't seen that either.

If I'm being very honest, GPT-4 is amazing but we still haven't seen its true release and capabilities. Currently only paying users can access it, through ChatGPT Plus. And it has restrictions too. Like 25 messages per 3 hours or something like that. I think OpenAI is facing a GPU shortage right now.

GPT-4 is amazing, but I haven't used it directly, so I'm not really impressed by it yet. In a way I have used it through Microsoft's Bing AI (Sydney), since it was confirmed that Bing AI uses GPT-4. But it's not the same thing. Bing AI is heavily restricted now.

I don't know how many months it will take for OpenAI to release GPT-4 for everyone on ChatGPT, or when we will see the 32k context window with vision capabilities. If I have to guess, most likely 3 or more months. I doubt they can ship this fast. Once I've used everything GPT-4 can offer, then I can truly judge it. Right now I'm not that impressed.

Microsoft also announced that Microsoft 365 Copilot is coming soon and showed what it can do. Now this was truly mind-blowing, and it will be powered by GPT-4 too. Still no release date, but this is going to be really disruptive for jobs that use Microsoft Office tools. Imagine making PPTs within seconds and writing stuff. I can't believe it is happening. So many people will lose jobs because of this. But the sooner the better. This will affect me too because my work is based on digital media.

I know my job won't last for long.

Google also showed their Workspace AI integration. They will ship AI to Gmail, Docs, Slides, Sheets, etc. as well. But their demo was massively underwhelming. It looked like a joke next to Microsoft's. Google also released Bard for public testing, but it got very bad feedback. Again, it looked like a joke next to Bing AI, which is powered by GPT-4. Bard is currently powered by a lightweight version of LaMDA. Google said that they will soon upgrade it with better models, maybe PaLM models too. I have access to Bard but I didn't even use it lol.

Google is honestly in a bad position right now. Everything they are showing is getting bad reviews. They used to be the leader, but OpenAI is now the king thanks to the Microsoft partnership. I read a few days ago that Google Brain and DeepMind are working together now to create a powerful bot. I think it's to compete with ChatGPT and Bing AI. Let's see how it goes.

Anthropic's Claude got a lot of integrations with different apps and sites this month. They opened a waitlist for API access. I even used it on a site, but it is underwhelming compared to OpenAI's GPT series.

I have noticed that a lot of LLMs got integrated with productivity apps, like Slack. And with Microsoft 365 Copilot and the Google Workspace AI tools, the disruption is definitely coming. In a few years people will use AI tools more for work. Wait, I think months.

Another thing that was amazing to see was the NeRF developments. I saw dozens and dozens of updates related to NeRF this month. They are improving at an exponential rate. Soon it will be possible to record what is happening in our lives and experience it virtually in the future whenever we want. And of course it's great for 3D development and gaming.

Midjourney v5 launched this month. Other than GPT-4, I think this was the best release. This is currently the best text-to-image model available. It surpasses Stable Diffusion easily. SD can be better with advanced prompting and stuff, but most people can't get that out of it easily. Midjourney v5 takes photorealism to another level. The level of detail it currently provides is crazy. I knew this would happen someday, but seeing it is just something else. Of course I don't have the subscription, but seeing the images created by other people... wow. Truly insane. If this is v5, I can't imagine v6 of Midjourney in the future. I know there is still a lot of room for improvement, and the next version will be mind-boggling. I'm so excited for the future. It was funny seeing so many Midjourney v5 generated pictures going viral. Especially the Pope's picture.

OpenAI released a report on the jobs that will be most affected by GPTs. It showed that people who have degrees have a way higher chance of seeing disruption, especially in my field of Journalism and Mass Communication lol. It's crazy that I am graduating soon and this is happening. Pretty sure by the end of this year, millions of jobs will simply vanish all over the world. I mean, this is only the third month.

The first open-source text-to-video model was released this month: ModelScope's text-to-video model. It is basically in the DALL-E Mini era. Very funny outputs, but the videos people created were very memeable. Just like what was happening with DALL-E Mini about a year ago. Less than a year ago actually. Crazy how fast things are progressing.

Runway also showed their upcoming text-to-video model, Gen-2. The quality is much better than ModelScope's, but it still hasn't been released and obviously won't be open sourced. But the examples I have seen so far are crazy.

I don't know the future right now, but when text-to-video models mature the way Midjourney v5 and Stable Diffusion have, man, it's gonna be something else. Unlimited entertainment. And a lot of possibilities. I know I will see this in the future. I hope the future is mind-blowing. I am so excited to see what is coming in the coming months and years.

LAION released and open sourced their Open Assistant chatbot. Currently in alpha but still amazing. Sadly it didn't get much attention as people are loving LLaMA more.

Man, there is just a lot to cover and I'm getting tired now. I should have updated this weekly. So I am skipping the rest now.

But the last thing I want to mention is that AI is getting more general now. And some people think AGI is already here. In fact, I now believe that AGI may be here by 2025-2030. As soon as 2025. There have been multiple updates related to this and I have bookmarked them. But I think soon we will have something related to general AI released to the public, like HuggingGPT, where people will be able to use Hugging Face models through ChatGPT. That's not AGI but a good start. Proto-AGI for some.

Totally forgot to mention, but another great thing was ChatGPT plugins. This is another level. ChatGPT itself can't do much, but plugins change everything. I see my dream of having a JARVIS-like assistant coming soon. Soon I'll do a lot of things just by talking to my chatbot. Like sending mails, downloading things, editing and generating pictures, asking questions that can't be answered yet, and so on.

If AGI really comes by 2025 and helps us advance technology, things will get insanely crazy. My dreams are coming true.

That's it for now. I should update weekly from now on. Future me, I know you are reading this. I don't know what April 2023 and beyond will bring, but I'm excited. I hope the Singularity arrives soon.
